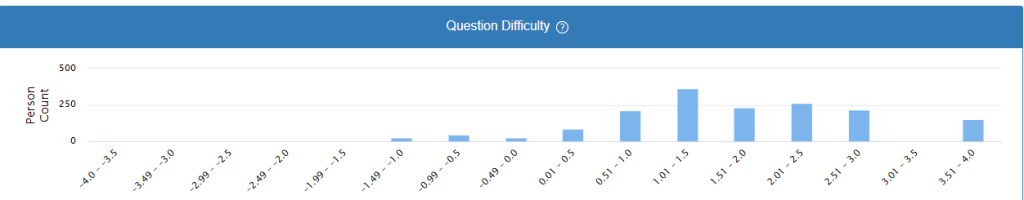

Question Difficulty – Rasch Distribution (Technical)

In Rasch measurement theory, question difficulty represents the level of ability required for a person to have a 50% probability of answering an item correctly. This difficulty is measured on a logit scale (log-odds units), which provides a linear, interval-level measurement that allows for meaningful mathematical operations and comparisons.
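The 50% definition follows directly from the dichotomous Rasch model, where the probability of a correct answer depends only on the gap between person ability and question difficulty. The sketch below is a minimal illustration; the function name `rasch_probability` is hypothetical and not part of any particular product or library.

```python
import math


def rasch_probability(ability: float, difficulty: float) -> float:
    """Probability of a correct answer under the dichotomous Rasch model.

    Both arguments are in logits. The name is illustrative only.
    """
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


# When ability equals difficulty, the chance of success is exactly 50%.
p_matched = rasch_probability(1.0, 1.0)

# A person one logit above the question's difficulty succeeds more often.
p_above = rasch_probability(2.0, 1.0)

print(p_matched, p_above)
```

A person whose ability sits below the question's difficulty gets a probability under 50% from the same function.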

The Logit Scale

The logit scale is centered at zero, where:

- Negative values (-4.5 to 0) indicate easier items that require lower ability levels

- Positive values (0 to +4.0) indicate more difficult items that require higher ability levels

- One logit represents the same difference in difficulty anywhere on the scale, so the gap between -3 and -2 is as large as the gap between +1 and +2
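One way to see why the scale has equal intervals: under the Rasch model, a one-logit advantage multiplies the odds of success by the same factor (e, about 2.718) wherever it occurs on the scale. The following sketch checks this at three arbitrary difficulty levels; the helper name `odds_of_success` is an assumption made for illustration.

```python
import math


def odds_of_success(ability: float, difficulty: float) -> float:
    """Odds (p / (1 - p)) of a correct answer under the Rasch model."""
    return math.exp(ability - difficulty)


# Compare a person one logit above the question with a person exactly
# at the question's difficulty, at easy, medium and hard locations.
ratios = []
for difficulty in (-3.0, 0.0, 2.0):
    ratio = odds_of_success(difficulty + 1.0, difficulty) / odds_of_success(difficulty, difficulty)
    ratios.append(ratio)

print(ratios)  # each entry is e, roughly 2.718
```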

Interpreting the Distribution Graph

The graph displays a person-question map (often called a Wright map), which shows how the distribution of person ability relates to question difficulty on a shared logit scale:

Key Features:

- X-axis: Question difficulty in logits (ranging from approximately -4.5 to +4.0); person ability is expressed on this same scale

- Y-axis: Person count (number of examinees at each ability level)

- Distribution shape: The bell-shaped pattern shows how examinees’ abilities are distributed relative to question difficulties
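The person-count bars can be thought of as a histogram of ability estimates grouped into logit bins. The sketch below shows one way to build such counts; the ability values, the 0.5-logit bin width, and the function name `bin_abilities` are all assumptions for illustration and are not taken from the graph above.

```python
import math
from collections import Counter


def bin_abilities(abilities, bin_width=0.5):
    """Count persons per logit bin; each key is the lower edge of a bin."""
    counts = Counter(math.floor(a / bin_width) * bin_width for a in abilities)
    return dict(sorted(counts.items()))


# Hypothetical ability estimates in logits.
abilities = [-0.3, 0.8, 1.1, 1.2, 1.4, 1.6, 1.7, 2.1, 3.2]

# Print a simple text histogram, one row per occupied bin.
for lower_edge, count in bin_abilities(abilities).items():
    print(f"{lower_edge:+.1f} | {'#' * count}")
```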

What This Tells Us:

- Targeting: The peak around 1.0 to 2.0 logits shows where most examinees are clustered in terms of ability. For optimal measurement precision, the average difficulty of your assessment items should fall close to this peak.

- Coverage: Items spanning from -4.5 to +4.0 logits provide measurement coverage for a wide range of ability levels, from very low-performing to very high-performing examinees.

- Measurement Precision: Areas with higher person counts (taller bars) indicate where item difficulty estimates will be most precise, because more examinees with abilities near those difficulties are available to calibrate the items.
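The link between person counts and precision can be sketched with the Rasch information function: each response contributes information p(1 - p), which is largest (0.25) when ability matches difficulty. The example below uses the standard large-sample approximation that the standard error of an item's difficulty is about one over the square root of the summed information, treating abilities as known. The ability values and function names are hypothetical.

```python
import math


def rasch_probability(ability: float, difficulty: float) -> float:
    """Probability of a correct answer under the dichotomous Rasch model."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def difficulty_standard_error(difficulty: float, abilities) -> float:
    """Approximate standard error of an item's difficulty estimate.

    Sums the information p * (1 - p) contributed by each person and
    returns 1 / sqrt(total information). Abilities are treated as known,
    which is a simplification.
    """
    information = 0.0
    for ability in abilities:
        p = rasch_probability(ability, difficulty)
        information += p * (1.0 - p)
    return 1.0 / math.sqrt(information)


# Hypothetical sample clustered between 1.0 and 2.0 logits.
abilities = [0.5, 1.0, 1.0, 1.5, 1.5, 1.5, 2.0, 2.0, 2.5]

se_on_target = difficulty_standard_error(1.5, abilities)    # item near the cluster
se_off_target = difficulty_standard_error(-4.0, abilities)  # item far below it

print(se_on_target, se_off_target)
```

The item located near the cluster receives the smaller standard error, because almost every examinee contributes close to the maximum information.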

Practical Implications

Well-targeted assessment – When person abilities and item difficulties are well-matched (overlapping distributions), you achieve:

- Maximum measurement precision

- Appropriate challenge level for most examinees

- Reliable differentiation between ability levels

- Efficient use of assessment time
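A simple first check on targeting is to compare the mean person ability with the mean question difficulty. The sketch below assumes both sets of estimates are available as lists of logits; the values and the name `targeting_offset` are illustrative only.

```python
from statistics import mean


def targeting_offset(abilities, difficulties) -> float:
    """Mean person ability minus mean question difficulty, in logits.

    Values near zero suggest a well-targeted assessment; a positive value
    suggests the questions are easy for this group, a negative value that
    they are hard.
    """
    return mean(abilities) - mean(difficulties)


# Hypothetical estimates in logits.
abilities = [0.5, 1.0, 1.5, 2.0, 2.5]
difficulties = [-1.0, 0.0, 0.5, 1.0, 2.0]

offset = targeting_offset(abilities, difficulties)
print(f"Targeting offset: {offset:+.2f} logits")
```

The offset summarizes only the centers of the two distributions, so it is best read alongside the full map rather than on its own.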

Gaps or misalignment between person abilities and item difficulties may indicate a need for:

- Additional items at specific difficulty levels

- Revised targeting for your intended population

- Consideration of adaptive testing approaches
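To locate where additional items might be needed, one option is to sort the calibrated difficulties and flag any stretch of the scale with no items. In this sketch the 1.0-logit threshold, the difficulty values, and the function name `find_difficulty_gaps` are assumptions chosen for illustration; an appropriate threshold depends on the assessment.

```python
def find_difficulty_gaps(difficulties, max_gap=1.0):
    """Return (lower, upper) pairs of adjacent item difficulties that are
    more than max_gap logits apart."""
    ordered = sorted(difficulties)
    return [
        (lower, upper)
        for lower, upper in zip(ordered, ordered[1:])
        if upper - lower > max_gap
    ]


# Hypothetical calibrated item difficulties in logits.
difficulties = [-4.5, -3.8, -3.0, -0.5, 0.2, 1.0, 1.6, 4.0]

for lower, upper in find_difficulty_gaps(difficulties):
    print(f"No items between {lower:+.1f} and {upper:+.1f} logits")
```

Gaps that coincide with tall bars on the map matter most, since many examinees in that ability range would have no closely matched question.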

This Rasch-based difficulty calibration helps your assessment provide meaningful, comparable measurements across different examinees and testing occasions.